Introduction

Imagine a hospital that certifies its nurses not through theory exams but by observing their ability to insert IV lines, respond to emergencies, and communicate clearly with patients. This is the essence of Competency-Based Assessment (CBA), which shifts the focus from what people know to how well they can apply knowledge, skills, and behaviours in real-world settings.

As we move into 2025, organisations and educators are increasingly embracing CBA, which promises more authentic learning and measurable workplace skills. Yet implementing it effectively requires thoughtful design, evidence-based practice, and a willingness to confront both benefits and limitations.

This guide brings together definitions, examples, challenges, and recent studies, while also providing FREE templates and checklists to help you design your own competency frameworks.

Table of Contents

- Introduction

- What is Competency-Based Assessment?

- Why CBA Still Matters Today

- Competency-Based Assessment vs. Traditional Assessment

- Key Components of a Strong CBA Framework

- Designing and Implementing Competency-Based Assessment

- Examples Across Industries

- Benefits of Competency-Based Assessment

- Challenges and How to Address Them

- Best Practices for 2025

- FAQs

- Conclusion

- References

What is Competency-Based Assessment?

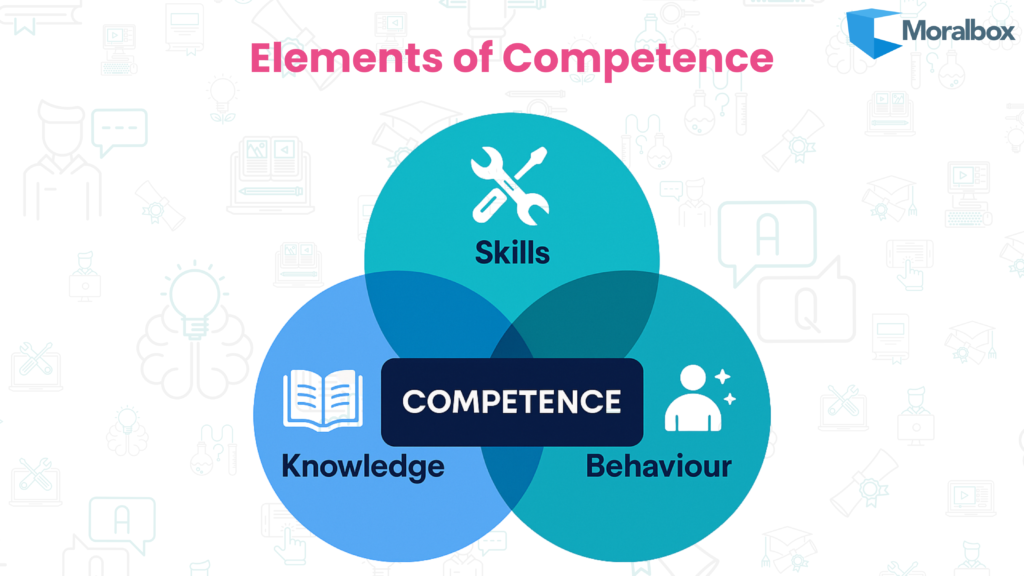

Competency-Based Assessment (CBA) measures whether a person can demonstrate defined competencies. These go beyond theoretical knowledge to cover the integration of skills, judgement, and behaviour. The emphasis is on observable performance in context.

For instance, a project manager might be tested not on memorising methodologies but on their ability to lead a team through a real or simulated project, resolving conflicts along the way. In this way, CBA produces evidence that individuals can apply learning in situations that mirror their professional or educational environments.

Figure 1: Venn Diagram of Elements of Competence

Why CBA Still Matters Today

- A shift towards skills-first education and work

Employers are increasingly prioritising evidence of capability over formal qualifications. A university degree may open doors, but organisations want assurance that new hires can perform tasks effectively. Similarly, education providers are under pressure to ensure students graduate work-ready.

- Compliance and safety requirements

In high-stakes industries such as healthcare, aviation, and construction, assessment of competence is not optional. Regulators require organisations to prove that staff can carry out tasks safely and consistently, and a simple written exam cannot provide that assurance.

- Backed by recent studies

A 2023 study of 303 undergraduates showed that when competency-based approaches were paired with formative feedback, students significantly improved soft skills such as teamwork and adaptability (Alt et al., 2023). However, the same study found that competency frameworks alone had limited effect without feedback, highlighting the need for assessment as part of a continuous learning cycle, not just a one-off test.

In higher education, a multi-university study of Spanish educators found growing use of competence rubrics yet uneven assessment practice, underscoring the need to build assessor capability and design quality (Velasco-Martínez and Tójar-Hurtado, 2018). In other words, policies alone are insufficient without proper implementation.

Finally, a 2024 article on authentic assessment warned that if poorly designed, CBA risks producing “surface-level” tasks that look realistic but fail to capture true capability (Fawns et al., 2024). Thus, design quality is just as important as intent.

Competency-Based Assessment vs. Traditional Assessment

| Aspect | Traditional Assessment | Competency-Based Assessment |

|---|---|---|

| Focus | Recall of knowledge | Application of skills and judgement |

| Method | Exams, essays, multiple-choice | Observation, simulations, portfolios |

| Outcome | Grades or pass/fail | Evidence of proficiency |

| Feedback | Often one-off, limited | Ongoing, developmental |

While traditional assessment remains useful for testing foundational knowledge, it does little to confirm whether learners can adapt that knowledge to real situations. CBA bridges this gap by aligning evaluation with authentic performance.

Key Components of a Strong CBA Framework

- Defined Competencies

These should be role-specific and clearly describe what capability looks like. For example, “communicates effectively with patients” is more actionable than “good communicator.”

- Observable Behaviours

Competence must be demonstrated through visible actions. For instance, in leadership, observable behaviours might include delegating tasks clearly or facilitating team discussions.

- Performance Indicators

Levels of competence (e.g., novice, proficient, advanced) help standardise expectations. This ensures assessments are not reduced to yes/no judgments but reflect a continuum of growth.

- Multiple Evidence Sources

Using only one type of evidence risks bias or superficiality. Strong frameworks combine direct observation, reflective portfolios, peer input, and even digital performance data.

- Feedback Cycles

Competence is not static. Regular feedback allows learners to reflect, adjust, and improve, making assessment part of an ongoing development process rather than a gatekeeping exercise.

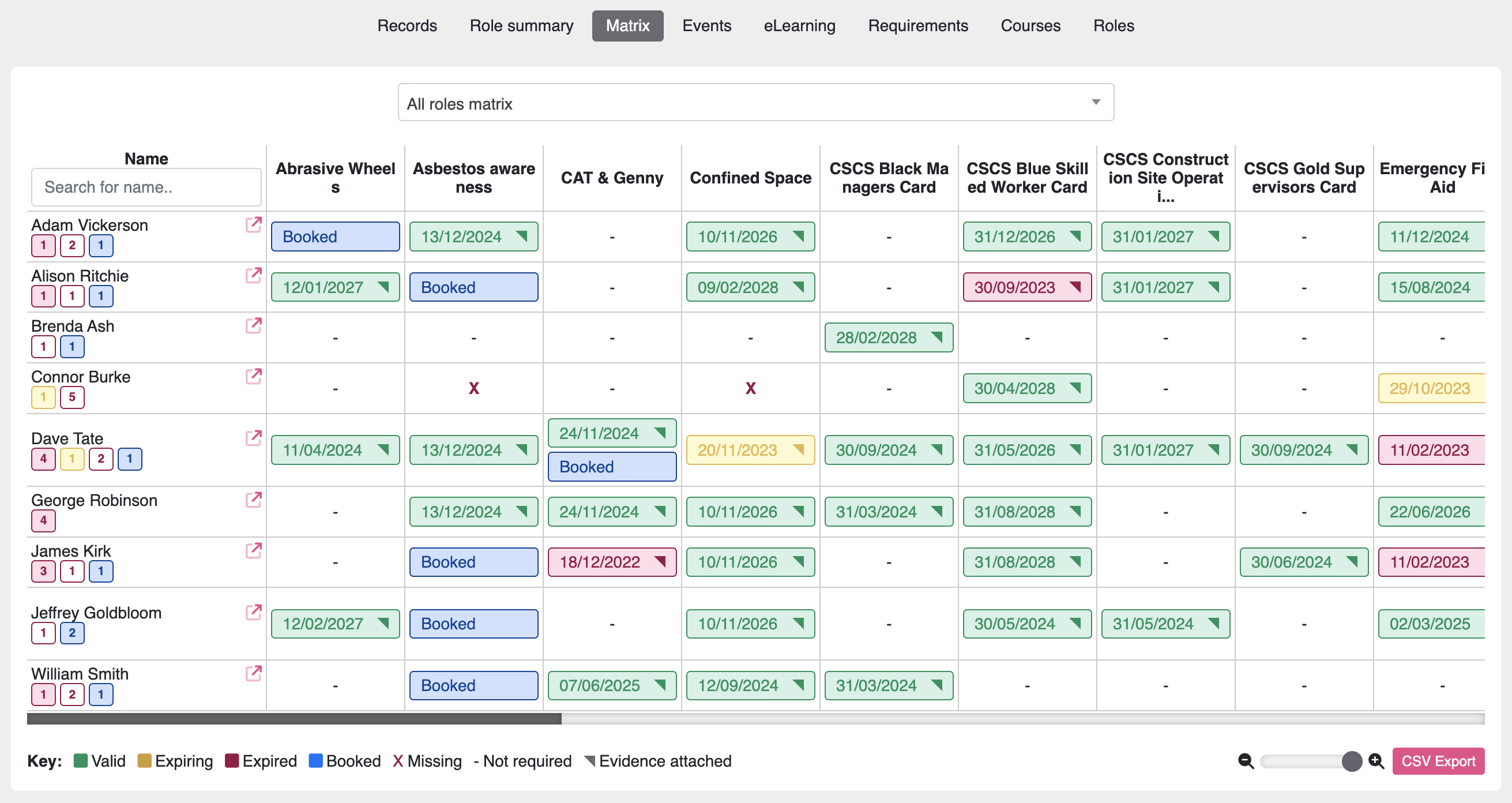

Here’s a sample Competency Matrix Template (Excel) showing how behaviours, indicators, and evidence can be mapped.
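For teams that prefer to prototype outside a spreadsheet, the same mapping of competencies, behaviours, indicators, and evidence can be sketched in a few lines of Python. This is only an illustrative sketch, not the structure of the template itself: the `CompetencyRecord` fields, the `target` level, and the two-source minimum in `needs_attention` are assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Level(IntEnum):
    # Example scale from the article: novice, proficient, advanced.
    NOVICE = 1
    PROFICIENT = 2
    ADVANCED = 3


@dataclass
class CompetencyRecord:
    # All field names are hypothetical, chosen for this sketch.
    competency: str            # what capability looks like
    behaviours: list[str]      # observable behaviours that demonstrate it
    target: Level              # level the role requires (assumed field)
    assessed: Level            # level recorded by the assessor
    evidence: set[str] = field(default_factory=set)  # evidence source types


def needs_attention(record: CompetencyRecord, min_sources: int = 2) -> list[str]:
    """Return the reasons a record needs follow-up; an empty list means none."""
    reasons = []
    if record.assessed < record.target:
        reasons.append("below target level")
    if len(record.evidence) < min_sources:
        reasons.append("too few evidence sources")
    return reasons


matrix = [
    CompetencyRecord(
        competency="Communicates effectively with patients",
        behaviours=["listens actively", "manages disagreement calmly"],
        target=Level.PROFICIENT,
        assessed=Level.NOVICE,
        evidence={"direct observation"},
    ),
    CompetencyRecord(
        competency="Leads team discussions",
        behaviours=["delegates tasks clearly", "facilitates team discussions"],
        target=Level.PROFICIENT,
        assessed=Level.ADVANCED,
        evidence={"direct observation", "peer input"},
    ),
]

for record in matrix:
    print(record.competency, "->", needs_attention(record) or "on track")
```

Keeping the evidence sources as a set makes it easy to spot records that rest on a single type of evidence, which is the risk the Multiple Evidence Sources component is meant to address.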

Designing and Implementing Competency-Based Assessment

Building a CBA system requires more than simply writing competencies into a policy document. It is an iterative process that should include:

- Engaging stakeholders. Involving managers, educators, and learners in the design phase ensures relevance and buy-in.

- Breaking competencies into measurable behaviours. Vague competencies (“leadership skills”) should be translated into practical markers (“can mediate disputes fairly”).

- Choosing appropriate assessment methods. High-risk tasks may require simulation; softer skills may benefit from peer review or reflective exercises.

- Training assessors. Without calibration and shared understanding, two assessors may score the same performance very differently.

- Piloting and reviewing. Rolling out small pilots allows organisations to gather data, adjust criteria, and scale gradually.

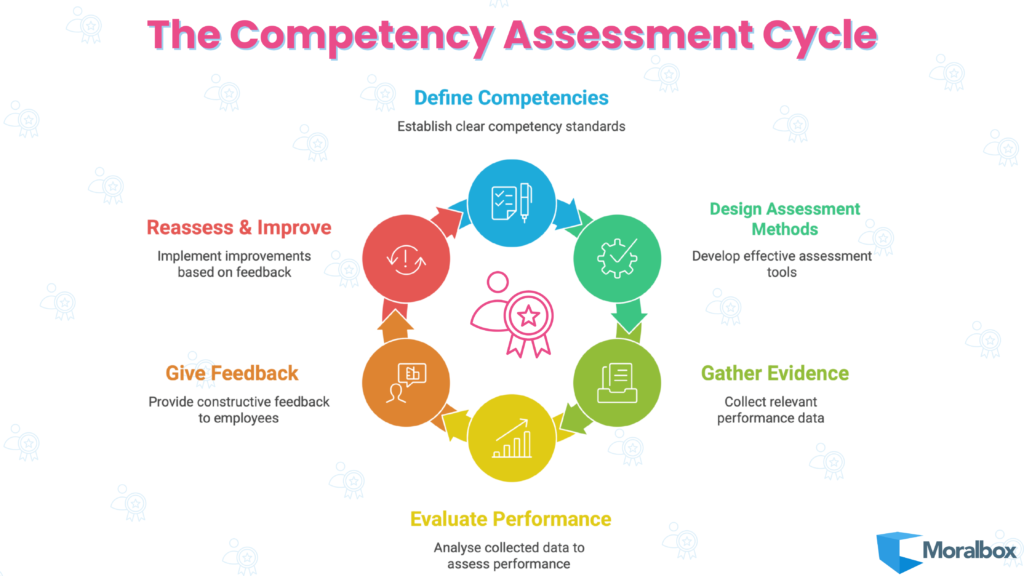

Figure 2: Circular Flow of the Competency Assessment Cycle

Examples Across Industries

Competency-Based Assessment is versatile. Because it measures real performance in context, it can be applied in regulated sectors, corporate learning, and education alike. Across industries, the same principle holds true: competence is proven not by theory alone, but by what people can reliably do.

- Healthcare: Nurses assessed on infection-control practices during patient care. Competence is judged by consistent adherence to protocols, not just memorising them.

- Aviation: Pilots tested through flight simulators that recreate crisis scenarios. What matters is decision-making under pressure, not theoretical recall.

- Corporate Training: Managers evaluated on leading conflict-resolution sessions, where both process and outcome provide evidence of capability.

- Education: Students assessed through project-based tasks, demonstrating how they apply theory to practical, real-world challenges.

These examples show that CBA can be applied in high-stakes professions, leadership development, and even classroom settings.

These approaches also connect with how individuals prefer to learn. For example, understanding Honey and Mumford’s Learning Styles can help educators design competency assessments that suit diverse learners.

Benefits of Competency-Based Assessment

- Relevance: Aligns learning with tasks employees or students will face in practice.

- Compliance: Provides auditable records that satisfy regulators and accreditation bodies.

- Development: Encourages learners to see assessment as part of growth, not just a hurdle.

- Return on investment: Organisations can track workforce readiness and identify training gaps.

- Confidence: Learners gain assurance that they are meeting professional standards.

Each of these benefits depends on well-designed systems, which is why CBA is often most successful when supported by digital tools that streamline evidence collection and reporting.

Challenges and How to Address Them

- Time and resources. Designing frameworks and training assessors takes investment. Starting with small pilots helps manage this.

- Assessor bias. Even with rubrics, judgments can be inconsistent. Regular calibration sessions improve reliability.

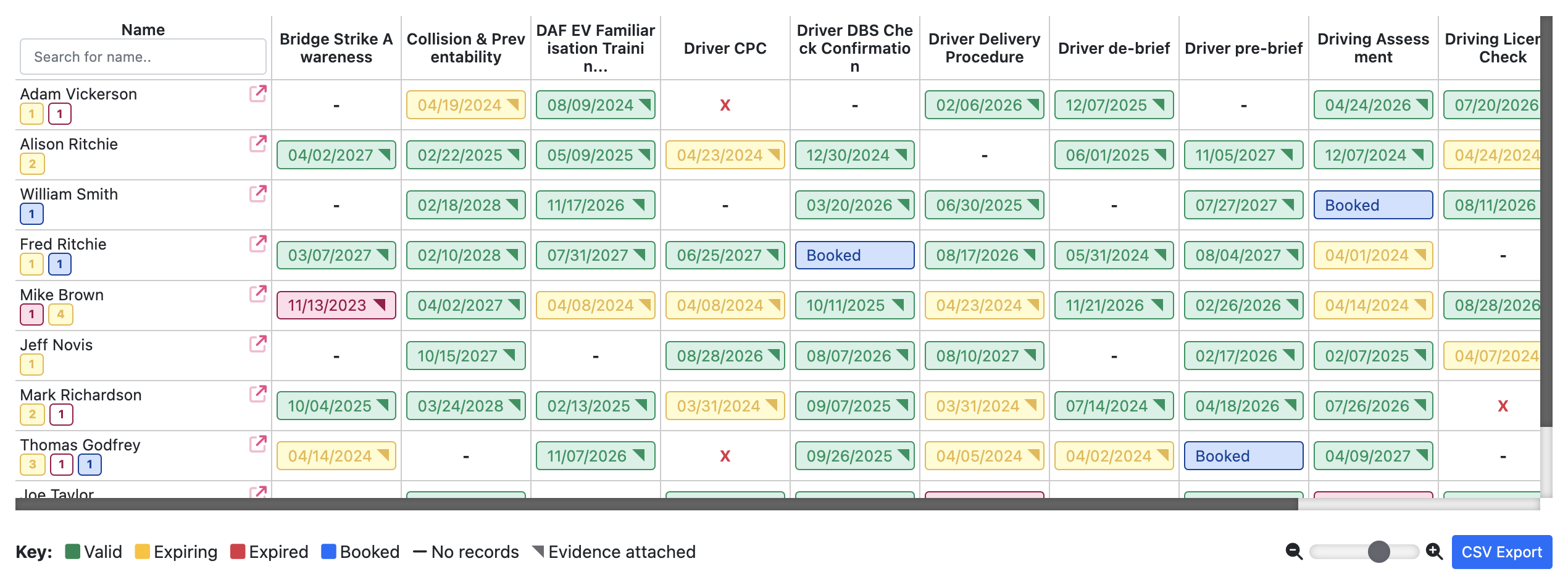

- Scalability. As programs grow, maintaining consistency becomes harder. Digital competency tracking systems can help standardise assessments across teams.

- Superficial design. Tasks that appear authentic may still fail to capture real complexity. Embedding multiple evidence types and scenario-based tasks reduces this risk.
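One way to make the assessor-bias point measurable during a calibration session is to have two assessors rate the same set of performances and compute an agreement statistic. The sketch below uses Cohen's kappa, a standard measure of agreement between two raters that corrects for chance. The article does not prescribe this statistic, so treat it as one possible choice; the function name and sample ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters who scored the same performances."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set")
    n = len(rater_a)
    # Proportion of performances on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of six performances by two assessors.
assessor_1 = ["novice", "proficient", "proficient", "advanced", "proficient", "novice"]
assessor_2 = ["novice", "proficient", "advanced", "advanced", "proficient", "proficient"]

print(f"kappa = {cohens_kappa(assessor_1, assessor_2):.2f}")  # prints kappa = 0.48
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance. Note that this simple form treats the levels as unordered labels, so a novice/advanced disagreement counts the same as a novice/proficient one.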

Best Practices for 2025

- Blend quantitative rubrics with qualitative narratives for richer evidence.

- Encourage self and peer assessment alongside assessor judgment.

- Use technology such as digital training matrices to centralise data and reporting.

- Refresh competency frameworks every 2–3 years to reflect evolving skill demands.

- Always integrate feedback loops so assessment drives improvement, not just certification.

A free Self-Assessment Checklist (Docx) is available to support this reflective element. The interactive tick-box tool allows individuals to review their competencies in areas such as communication, teamwork, problem-solving, and leadership.

Embedding structured reflection is equally valuable. Schön’s Reflective Practice shows how self-assessment and feedback can transform assessment into continuous professional growth.
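The recommendation to refresh frameworks every 2–3 years is easy to lose track of once several frameworks are in use. As a minimal sketch, a small helper could flag which ones are due. The function name, the status labels, and the framework names and dates below are hypothetical; only the 2- and 3-year thresholds come from the best practice above.

```python
from datetime import date


def review_status(last_reviewed: date, today: date) -> str:
    """Classify a framework by how long ago it was last reviewed."""
    # Approximate age in years; precise enough for a review reminder.
    age_years = (today - last_reviewed).days / 365.25
    if age_years >= 3:
        return "overdue"
    if age_years >= 2:
        return "due soon"
    return "current"


# Hypothetical frameworks and review dates.
frameworks = {
    "Nursing infection control": date(2022, 1, 10),
    "Team leadership": date(2023, 6, 1),
    "Customer communication": date(2024, 9, 1),
}

today = date(2025, 9, 25)
for name, last_reviewed in frameworks.items():
    print(f"{name}: {review_status(last_reviewed, today)}")
```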

FAQs

How is competency assessment different from skills testing?

Skills testing often isolates single abilities (e.g., typing speed), while competency assessment evaluates the integration of knowledge, behaviour, and skills in context.

Can it measure soft skills like communication?

Yes, when these are broken into observable behaviours such as “listens actively,” “acknowledges others’ perspectives,” or “manages disagreement calmly.”

Is it suitable outside regulated industries?

Absolutely. Many companies use competency-based models for onboarding, leadership development, and performance management, even where compliance is not a legal requirement.

Conclusion

Competency-Based Assessment is not just a trend; it’s becoming the standard for ensuring learners and employees are truly ready for the demands of their roles. Recent studies confirm its potential for enhancing skills and confidence, but also highlight the need for careful design, consistent assessor training, and genuine authenticity in tasks.

If you are just starting out, begin small: identify one or two key roles, design a competency matrix, and test it with a pilot. Use the feedback to refine and scale. And remember, CBA is most powerful when treated not as a gatekeeper, but as a continuous cycle of growth.

Download both the Competency Matrix Template and the Self-Assessment Checklist to build a complete CBA toolkit.

And when you’re ready to scale beyond spreadsheets, explore how the Moralbox Training Matrix can simplify competency tracking across your organisation.

References

Alt, D., Naamati-Schneider, L. and Weishut, D.J.N. (2023) ‘Competency-based learning and formative assessment feedback as precursors of college students’ soft skills acquisition’, Studies in Higher Education, 48(12), pp. 1901–1917. Available at: https://eric.ed.gov/?id=EJ1404213 (Accessed: 25 September 2025).

Velasco-Martínez, L.-C. and Tójar-Hurtado, J.-C. (2018) ‘Competency-Based Evaluation in Higher Education—Design and Use of Competence Rubrics by University Educators’, International Education Studies, 11(2), p. 118. Available at: https://www.ccsenet.org/journal/index.php/ies/article/view/70741 (Accessed: 25 September 2025).

Fawns, T., Bearman, M., Dawson, P., Nieminen, J.H., Ashford-Rowe, K., Willey, K., Jensen, L.X., Damşa, C. and Press, N. (2024) ‘Authentic assessment: from panacea to criticality’, Assessment & Evaluation in Higher Education. doi:10.1080/02602938.2024.2404634. Available at: https://www.tandfonline.com/doi/full/10.1080/02602938.2024.2404634 (Accessed: 25 September 2025).

Ananya is a Marketing Executive at Moralbox, passionate about creating content that connects learning with business impact.